Engineering Strategies for AI-Driven Innovation Series - Part 2/3

Expanding Horizons with Cloud Computing and Distributed Systems

This article is Part 2 of the series “Engineering Strategies for AI-Driven Innovation,” where we examine the critical role of cloud computing in the expansion and scaling of AI capabilities.

Previously, in Part 1, we established the groundwork by exploring the fundamentals of AI scalability and the significance of adopting modular design principles.

In this article, we will explore how the cloud provides the essential services and environment in which AI can grow and adapt with agility. We will also dive into distributed systems and parallel processing, two key factors that enable AI applications to handle large amounts of data and complex calculations across multiple machines.

Leveraging the Cloud for AI Scalability

Cloud Computing: Cloud computing accelerates AI scalability by offering on-demand access to advanced tools and computational power, such as GPUs and TPUs, without the upfront cost of buying physical hardware.

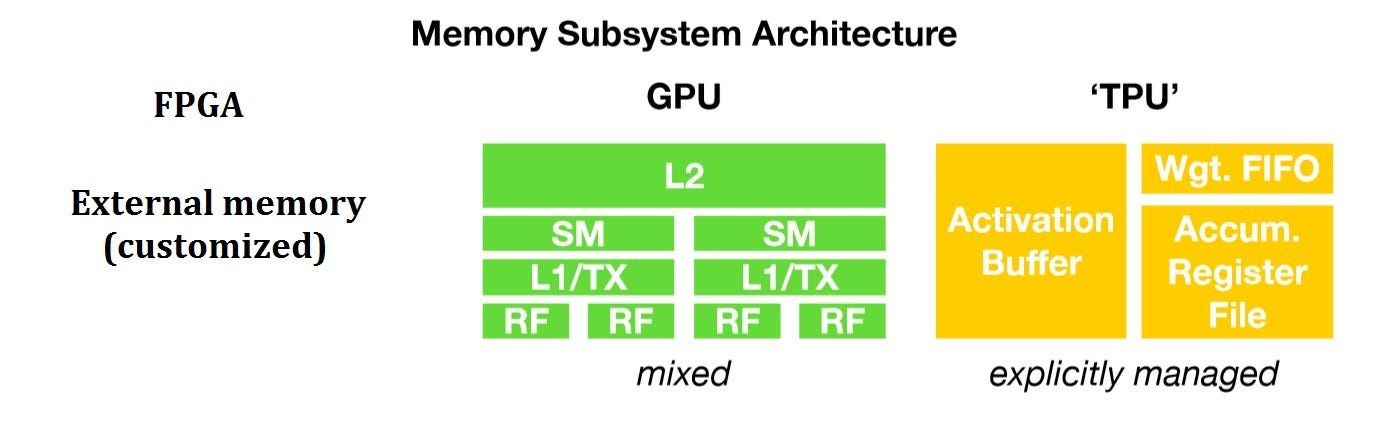

Graphics Processing Units (GPUs): GPUs were originally designed to handle the demands of graphics and video rendering, but they have since become a popular choice for accelerating AI computations. Their architecture, with hundreds to thousands of cores capable of running thousands of threads simultaneously, makes them exceptionally well suited to the parallel processing requirements of machine learning algorithms.

The graphics processing unit, or GPU, has become one of the most important types of computing technology, both for personal and business computing. Designed for parallel processing, the GPU is used in a wide range of applications, including graphics and video rendering. Although they’re best known for their capabilities in gaming, GPUs are becoming more popular for use in creative production and artificial intelligence (AI). [9]

Tensor Processing Units (TPUs): TPUs are application-specific integrated circuits (ASICs) developed by Google for machine learning. They were originally designed to accelerate workloads built with the TensorFlow framework, which is commonly used in deep learning applications, and they now also support frameworks such as JAX and PyTorch. TPUs are tailored to deliver high throughput for the matrix operations that are prevalent in neural network calculations, which can make them a more efficient alternative to GPUs for certain AI tasks.

Google Cloud TPUs are custom-designed AI accelerators, which are optimized for training and inference of large AI models. They are ideal for a variety of use cases, such as chatbots, code generation, media content generation, synthetic speech, vision services, recommendation engines, personalization models, among others. [8]
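To make the "matrix operations" mentioned above more concrete, here is a minimal pure-Python sketch of the forward pass of one fully connected neural network layer. The function names, the tiny matrices, and the ReLU activation are illustrative assumptions, not anything taken from Google's documentation. In practice this multiplication would be expressed in a framework such as TensorFlow or JAX so that it runs on the accelerator.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    # Each output cell is an independent dot product, which is why
    # hardware with many parallel units can compute many cells at once.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def dense_layer(inputs, weights, bias):
    """Forward pass of one fully connected layer followed by ReLU."""
    pre_activation = matmul(inputs, weights)
    return [[max(0.0, value + bias[j]) for j, value in enumerate(row)]
            for row in pre_activation]


# Illustrative values only: 2 examples with 2 features, mapped to 2 outputs.
batch = [[1.0, 2.0], [3.0, 4.0]]
weights = [[0.5, -1.0], [0.25, 1.0]]
bias = [0.0, -0.5]

outputs = dense_layer(batch, weights, bias)
print(outputs)
```

Because every output cell depends only on one row of the input and one column of the weights, the work grows quickly with model size but splits cleanly across parallel hardware, which is the property GPUs and TPUs exploit.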

Enterprises can use Cloud TPUs to process massive datasets for real-time analytics. With cloud services, businesses of all sizes can adapt quickly to AI demands while maintaining performance and innovating rapidly.

You can read more about GPUs and TPUs in the Backblaze article "AI 101: GPU vs. TPU vs. NPU" listed in the references.

Elastic Scalability: Cloud computing offers a strategic advantage for AI with its elastic scalability, ensuring resources match business needs dynamically. It allows for expansion during peak periods and cost-effective contraction when demand drops.

For instance, an e-commerce company can scale up its AI to analyze real-time data during Black Friday, then scale down afterwards. Auto-scaling makes this adjustment without manual intervention, so the company pays for peak capacity only while it is needed and keeps operations lean and competitive.
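The Black Friday scenario can be sketched in a few lines. This is a simplified illustration, not any cloud provider's auto-scaling API: the load figures, the per-instance capacity, and the price are all made-up assumptions, and `instances_needed` is a hypothetical helper.

```python
import math


def instances_needed(requests_per_second, capacity_per_instance,
                     min_instances=1, max_instances=50):
    """Smallest instance count that covers the load, clamped to the allowed range."""
    required = math.ceil(requests_per_second / capacity_per_instance)
    return max(min_instances, min(max_instances, required))


# Hypothetical hourly load (requests per second) around a sales peak.
hourly_load = [200, 250, 900, 4000, 3800, 1200, 300, 200]
CAPACITY = 100        # assumed requests per second that one instance can serve
PRICE_PER_HOUR = 1.0  # assumed price of one instance-hour, in arbitrary units

# Elastic: resize every hour to match the load.
elastic_plan = [instances_needed(load, CAPACITY) for load in hourly_load]
elastic_cost = sum(elastic_plan) * PRICE_PER_HOUR

# Fixed: provision for the peak and keep that capacity running all day.
fixed_cost = max(elastic_plan) * len(hourly_load) * PRICE_PER_HOUR

print(elastic_plan)
print(elastic_cost, fixed_cost)
```

Under these assumed numbers the elastic plan uses about a third of the instance-hours of the fixed plan. The real saving depends entirely on how spiky the workload is.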

Cloud Services for AI: Choosing the right cloud services is an important early step for any AI project. For example, Amazon EC2 instances with powerful GPUs or Google Cloud's AI Platform with TPUs can improve the performance of your AI workloads.

Storage services such as Google Cloud Storage or Amazon S3 offer scalable, fast access to your data so that it streams efficiently to your models. Security services, like Azure Security Center (now part of Microsoft Defender for Cloud), help protect your valuable data, while tools like AWS Identity and Access Management (IAM) control who can access which resources, which supports regulatory compliance.

Managed AI services, such as Amazon SageMaker or Azure Machine Learning, act as a skilled support team, offering pre-built models and managed tooling for complex tasks so that you can innovate faster. Cloud service providers offer end-to-end services that free you to concentrate on development and on building new applications.

Choosing the right cloud services, from compute power to managed AI, is a strategic move towards more streamlined development and greater performance. As AI technology evolves, the collaboration with cloud services deepens, leading to a smarter and more connected future driven by cloud capabilities.

Case Study on Arabesque AI: Scaling Autonomous Investing with Cloud Innovation

This case study will examine the methodologies adopted by Arabesque AI, a London-based tech company, to address the challenges associated with data scalability and sensitivity, which are crucial for the efficacy and security of their AI engine. By integrating Google Kubernetes Engine (GKE) and BigQuery, Arabesque AI has surpassed traditional financial models, delivering a global, autonomous investing platform at an unprecedented scale.

The detailed case study is available on the Google Cloud website (see the Arabesque AI entry in the references).

Cloud Infrastructure at the Core: Arabesque AI’s deployment on Google Cloud infrastructure has been central to its ability to manage large and live data sets. Cloud services like BigQuery have been instrumental in handling the streaming nature of news sources, which feed into Arabesque AI’s NLP pipelines and financial knowledge graph.

Serverless Solutions for Operational Efficiency: According to the case study, Arabesque AI's adoption of serverless solutions such as App Engine, Firestore, and Firebase has significantly reduced the company's operational overhead. These services have allowed Arabesque AI to focus on its core competencies in machine learning and finance rather than on operations and infrastructure maintenance.

Advancing Data Pipeline Technologies: Arabesque AI’s development of a pipeline using Apache Beam and Google Cloud Dataflow represents the company’s commitment to state-of-the-art data pipeline technologies. This initiative has enhanced the company’s ability to process data with low latency and high throughput, which is essential for real-time financial forecasting.
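One core idea in streaming pipelines of the kind described above is grouping timestamped events into fixed time windows before aggregating them. The sketch below illustrates that idea in plain Python. It is not the Apache Beam API and not Arabesque AI's pipeline. The event data, company names, and the `fixed_windows` helper are hypothetical.

```python
from collections import defaultdict


def fixed_windows(events, window_seconds):
    """Group (timestamp, key) events into fixed-size windows and count keys per window."""
    counts = defaultdict(lambda: defaultdict(int))
    for timestamp, key in events:
        # Every event falls into exactly one window, identified by its start time.
        window_start = (timestamp // window_seconds) * window_seconds
        counts[window_start][key] += 1
    # Convert to plain dicts, ordered by window start, for easy inspection.
    return {start: dict(counts[start]) for start in sorted(counts)}


# Hypothetical stream: (seconds since start, company mentioned in a news item).
news_events = [
    (1, "ACME"), (12, "ACME"), (35, "GLOBEX"),
    (61, "ACME"), (65, "GLOBEX"), (119, "GLOBEX"),
]

per_minute = fixed_windows(news_events, window_seconds=60)
print(per_minute)
```

A managed runner such as Dataflow applies the same grouping continuously to an unbounded stream and spreads the windows across workers, which is where the low latency and high throughput come from.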

Key Takeaways:

The significance of a scalable and secure cloud infrastructure in the processing of sensitive financial data and AI operations.

The strategic employment of serverless cloud solutions to enhance cost-efficiency and operational efficiency.

The integral role played by BigQuery and other Google Cloud services in the management of substantial text data volumes for NLP pipelines.

Arabesque AI’s engagement with the open-source community and its embrace of projects like Apache Beam to refine their data pipeline technologies.

Distributed Systems and Parallel Processing

The extensive processing needs of AI models, when handling large datasets or executing complex computations, can present considerable obstacles. Distributed computing and parallel processing step in at this point, acting as the critical infrastructure that allows AI to scale and address the demands of today’s data-driven world.

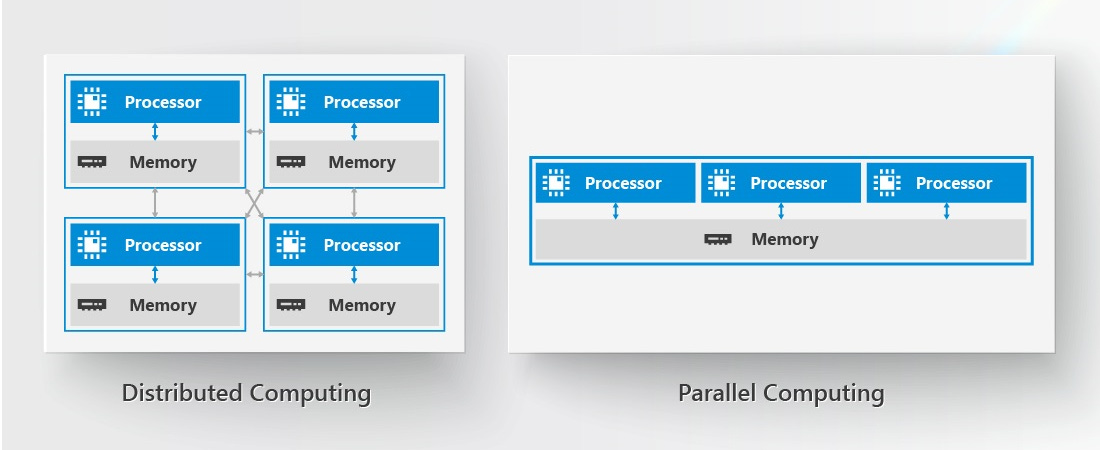

Distributed computing: Distributed computing involves a collection of computers linked together, collaborating to accomplish a shared objective. This method distributes tasks among several systems, enabling more effective handling of substantial AI workloads.

Distributed computing is best for building and deploying powerful applications running across many different users and geographies. Anyone performing a Google search is already using distributed computing. Distributed system architectures have shaped much of what we would call “modern business,” including cloud-based computing, edge computing, and software as a service (SaaS). [10]

Parallel processing: Parallel processing means splitting a computation into parts that run at the same time, either on multiple cores of one machine or across the machines of such a network, enabling faster computation and real-time analytics.

This computing method is ideal for anything involving complex simulations or modeling. Common applications for it include seismic surveying, computational astrophysics, climate modeling, financial risk management, agricultural estimates, video color correction, medical imaging, drug discovery, and computational fluid dynamics. [10]
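The split, process, and combine pattern behind parallel processing can be sketched with Python's standard library. The helper names and the toy workload are assumptions for illustration. Threads are used here only to show the structure: for CPU-heavy work in CPython, `ProcessPoolExecutor` offers the same `map` interface and runs the chunks on separate cores.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_chunks(items, chunk_count):
    """Split a list into chunk_count contiguous chunks of nearly equal size."""
    size, remainder = divmod(len(items), chunk_count)
    chunks, start = [], 0
    for index in range(chunk_count):
        end = start + size + (1 if index < remainder else 0)
        chunks.append(items[start:end])
        start = end
    return chunks


def sum_of_squares(chunk):
    """The per-chunk work; each chunk is independent of the others."""
    return sum(value * value for value in chunk)


def parallel_sum_of_squares(items, workers=4):
    """Process the chunks concurrently, then combine the partial results."""
    chunks = split_into_chunks(items, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_sums = list(pool.map(sum_of_squares, chunks))
    return sum(partial_sums)


data = list(range(1, 1001))
total = parallel_sum_of_squares(data)
print(total)
```

The pattern only works this cleanly because the chunks do not depend on each other. Workloads with shared state need coordination, which is where distributed frameworks earn their keep.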

A variety of important technologies and frameworks have been developed to support distributed AI, each offering its own advantages. Notably, Apache Spark stands out as a comprehensive analytics engine celebrated for its rapid processing of extensive data tasks. This is achieved through distributing data across a cluster and processing it concurrently, positioning it as an ideal platform for machine learning and real-time analytics.

Another key framework, Hadoop, offers a sturdy and scalable solution for distributed storage and big data processing using the MapReduce programming model. Its distributed file system, HDFS, facilitates data storage across a vast network of standard servers, providing strong reliability and high availability.
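The MapReduce programming model is easiest to understand through the classic word-count example. The sketch below runs the three phases in a single Python process. It does not use Hadoop, and the function names and sample documents are illustrative assumptions. In a real cluster, the map and reduce phases run on many machines and the shuffle moves data between them.

```python
from collections import defaultdict


def map_phase(document):
    """Map: emit a (word, 1) pair for every word in one document."""
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(mapped_pairs):
    """Shuffle: group all emitted values by key so each key reaches one reducer."""
    grouped = defaultdict(list)
    for key, value in mapped_pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Reduce: combine the values for each key into a single result."""
    return {key: sum(values) for key, values in grouped.items()}


documents = ["cloud scales AI", "AI needs data", "data lives in the cloud"]

# Each document could be mapped on a different machine.
mapped = [pair for doc in documents for pair in map_phase(doc)]
word_counts = reduce_phase(shuffle_phase(mapped))
print(word_counts)
```

Because each document is mapped independently and each key is reduced independently, both phases can be spread over as many servers as the data requires.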

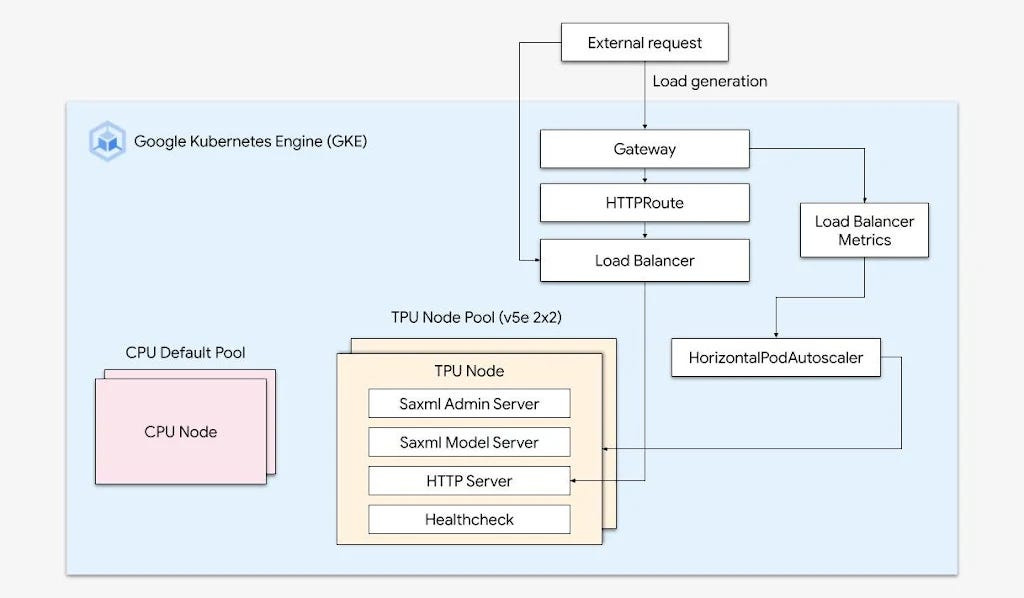

Kubernetes, a more recent addition to the world of distributed computing, has emerged as a cutting-edge solution. It streamlines the process of deploying, scaling, and managing containerized applications, including AI services, throughout a cluster of machines. The platform’s adeptness at managing dynamic workloads renders it particularly suitable for AI models that need to quickly adjust their scale in response to fluctuating demand.
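How does Kubernetes decide how far to scale? Its Horizontal Pod Autoscaler documentation describes a proportional rule: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. The sketch below implements that rule in simplified form. The function name, the default bounds, and the sample numbers are assumptions, and the real controller also accounts for pod readiness, missing metrics, and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified proportional scaling rule, clamped to the configured bounds."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    proposed = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, proposed))


# Hypothetical example: 4 replicas of a model server, target 60% average CPU.
print(desired_replicas(4, current_metric=90, target_metric=60))   # load is above target
print(desired_replicas(4, current_metric=30, target_metric=60))   # load is below target
print(desired_replicas(4, current_metric=63, target_metric=60))   # within tolerance
```

The tolerance band avoids constant resizing when the metric hovers near the target, and the upper bound caps cost if demand spikes unexpectedly.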

The significance of distributed computing and parallel processing in amplifying AI’s capabilities is paramount. As AI continues to evolve, reliance on these systems and the cloud infrastructure supporting them will increase, establishing a foundation for advanced and accessible AI functionalities for businesses and consumers alike.

Case study: Nuclera runs AlphaFold2 on Vertex AI

DeepMind’s AlphaFold represents a significant breakthrough in the field of computational biology, particularly in solving the protein folding problem.

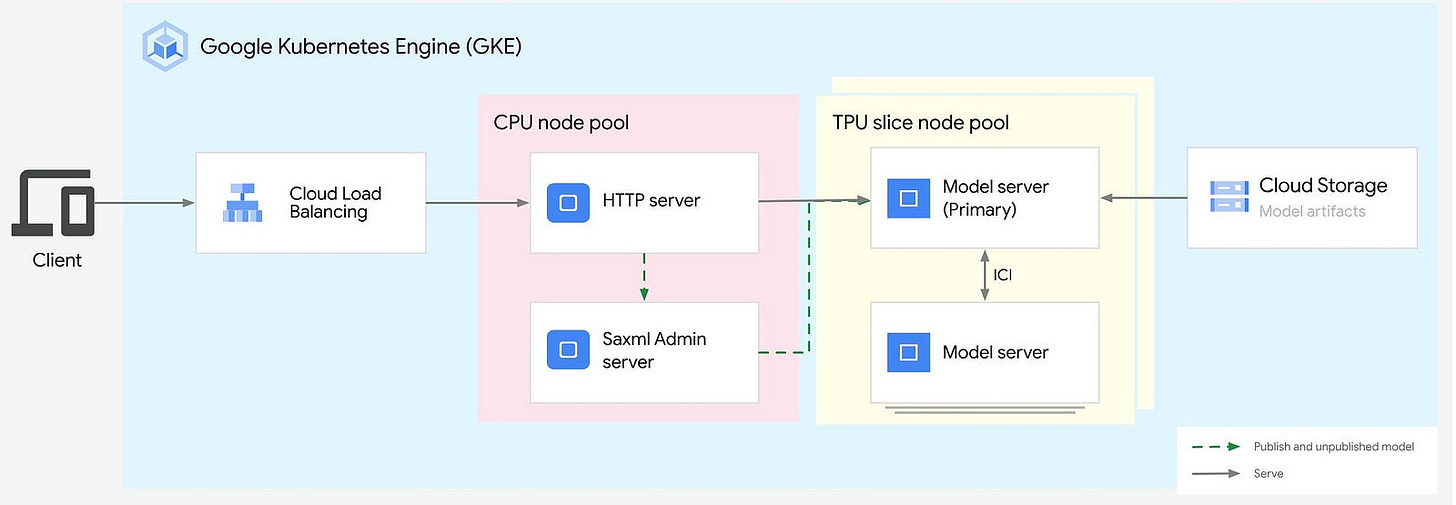

Nuclera, a UK and US-based biotechnology company, is collaborating with Google Cloud to serve the life science community, marrying Nuclera’s rapid protein access benchtop system with Google DeepMind’s pioneering protein structure prediction tool, AlphaFold2 (ref 1) served on Google Cloud’s Vertex AI machine learning platform. [12]

Here’s a more detailed look at the case study and its use of distributed systems and parallel processing on the cloud.

Background: Proteins are essential molecules responsible for a diverse array of functions within living organisms. Gaining insight into the three-dimensional structure of proteins is critical to revealing how they operate and is key for pharmaceutical advancements, as the shape of a protein is closely linked to how it works.

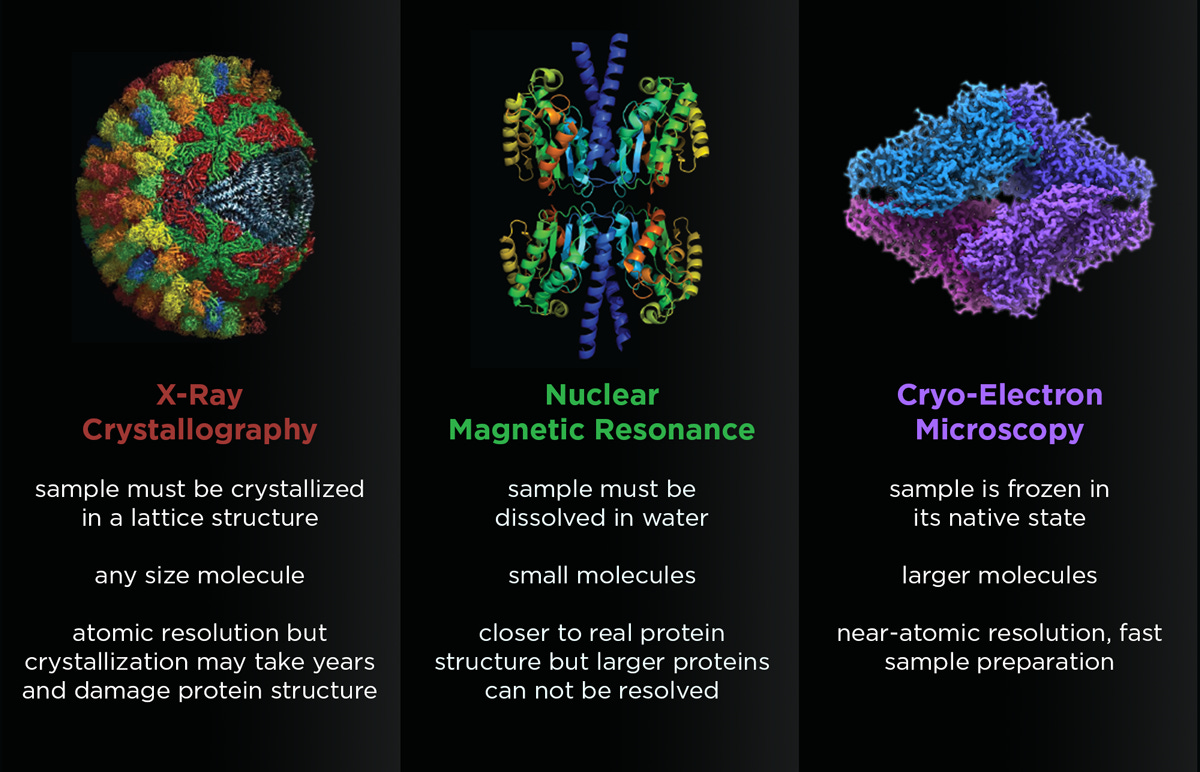

However, identifying these structures through experimental methods like X-ray crystallography or cryo-electron microscopy is a very time-intensive and expensive task.

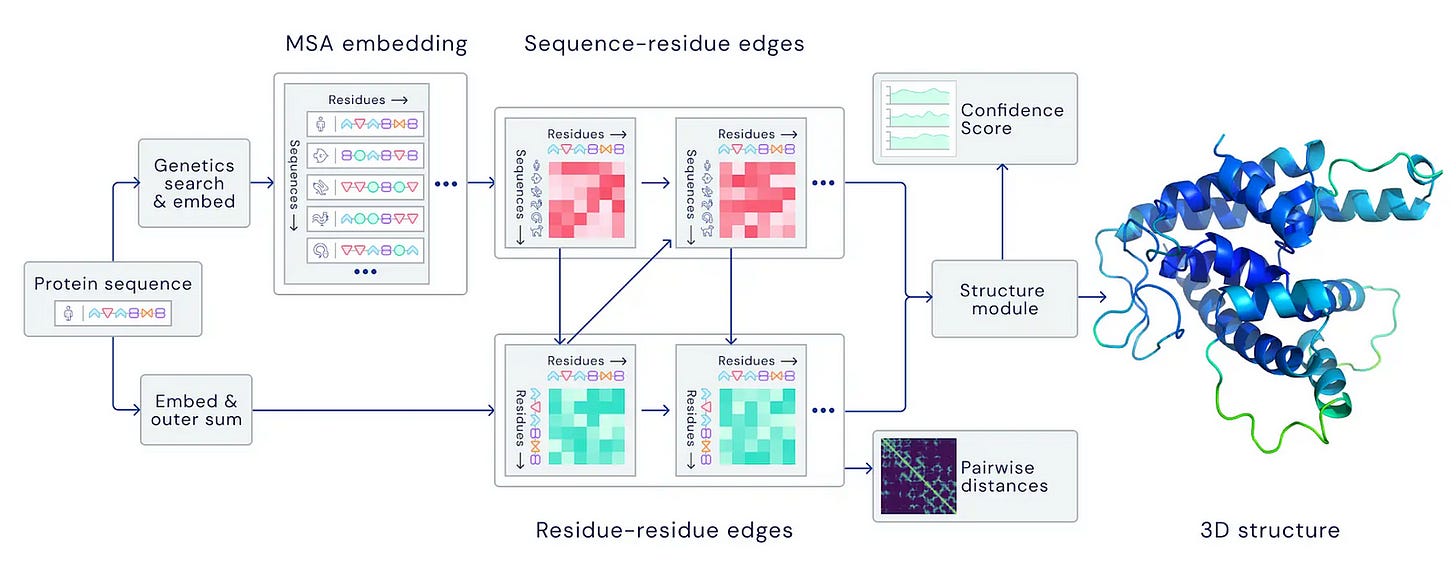

AlphaFold: AlphaFold is an AI system developed by DeepMind that predicts the 3D structure of a protein from its amino acid sequence with remarkable accuracy.

DeepMind and EMBL’s European Bioinformatics Institute (EMBL-EBI) have partnered to create AlphaFold DB to make these predictions freely available to the scientific community. [11]

However, the computational intensity of generating accurate protein structures at scale posed significant challenges. This case study explores how Google Cloud infrastructure and distributed computing have been leveraged to optimize AlphaFold2’s performance.

Challenges: The primary obstacles included:

Extensive computational resources required for protein structure prediction

The need for efficient scaling of inference workflows

Optimization of hardware resource utilization to manage costs

Robust experiment management for concurrent workflows

Cloud Infrastructure Solution with Vertex AI

Vertex AI, a Google Cloud platform, was introduced as a solution to these challenges. It provided:

Workflow Optimization: By parallelizing independent steps, Vertex AI reduced the overall inference time.

Cost-Effective Resource Management: The platform ensured optimal hardware usage by dynamically provisioning and de-provisioning resources.

Experiment Tracking: A flexible system was established to monitor and analyze multiple inference workflows, simplifying the management process.

Distributed Computing Enhancements

Distributed computing played a pivotal role in addressing the computational demands by:

Parallel Processing: Independent tasks were processed simultaneously, significantly speeding up the workflow.

Resource Optimization: Each step was executed on the most suitable hardware, ensuring efficient use of computational power.
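The benefit of parallelizing independent steps can be estimated with a small dependency-graph calculation. The sketch below compares running every step one after another with starting each step as soon as its dependencies finish. The step names and durations are hypothetical and are not taken from the Nuclera case study. The calculation also assumes an acyclic workflow and enough workers to run all ready steps at once.

```python
def sequential_time(durations):
    """Total time when the steps run one after another."""
    return sum(durations.values())


def parallel_time(durations, depends_on):
    """Finish time when every step starts as soon as all of its dependencies are done."""
    finish = {}

    def finish_time(step):
        if step not in finish:
            start = max((finish_time(dep) for dep in depends_on.get(step, [])),
                        default=0)
            finish[step] = start + durations[step]
        return finish[step]

    return max(finish_time(step) for step in durations)


# Hypothetical workflow: durations in minutes.
durations = {
    "prepare_features": 30,
    "predict_model_1": 20,
    "predict_model_2": 20,
    "predict_model_3": 20,
    "rank_results": 5,
}
depends_on = {
    "predict_model_1": ["prepare_features"],
    "predict_model_2": ["prepare_features"],
    "predict_model_3": ["prepare_features"],
    "rank_results": ["predict_model_1", "predict_model_2", "predict_model_3"],
}

print(sequential_time(durations))
print(parallel_time(durations, depends_on))
```

With these made-up numbers, the three prediction steps overlap and the workflow finishes in 55 minutes instead of 95. The longest chain of dependent steps sets the lower limit, so adding more workers helps only while independent steps remain.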

Key Takeaways

Cloud Infrastructure Enhances AI: AlphaFold2’s success underscores the importance of cloud platforms like Vertex AI for scalable AI processing.

Parallel Processing Boosts Efficiency: Using distributed computing to run tasks simultaneously speeds up AI workflows.

Cost Savings with Smart Resource Use: Dynamic resource allocation in cloud computing optimizes hardware use and cuts costs.

Simplified Management for Large-Scale AI: Effective tracking systems are critical for managing and analyzing numerous AI experiments.

Innovation Through Strategic Technology Use: Nuclera’s example shows how adopting cloud and distributed computing can lead to industry advancements.

For those keen to delve deeper into AlphaFold or to learn how to use it on Google Cloud Platform (GCP), the AlphaFold and Nuclera entries in the references below are useful starting points.

References:

[1] IBM Developer. (n.d.). https://developer.ibm.com/learningpaths/get-started-distributed-ai-apis/what-is-distributed-ai/

[2] Doyle, S. (2023, September 19). AI 101: GPU vs. TPU vs. NPU. Backblaze Blog. https://www.backblaze.com/blog/ai-101-gpu-vs-tpu-vs-npu/

[3] Google Cloud. (n.d.). Arabesque AI case study. https://cloud.google.com/customers/arabesque-ai

[4] OpenAI. (n.d.). Techniques for training large neural networks. https://openai.com/research/techniques-for-training-large-neural-networks

[5] Amazon Web Services. (n.d.). Guidance for distributed model training on AWS. https://aws.amazon.com/solutions/guidance/distributed-model-training-on-aws/

[6] SiliconANGLE. (2023, October 31). Google DeepMind debuts new version of its AlphaFold model for researchers. https://siliconangle.com/2023/10/31/google-deepmind-debuts-new-version-alphafold-model-researchers/

[7] Outeiral Rubiera, C. (2021, July 19). AlphaFold 2 is here: What’s behind the structure prediction miracle. Oxford Protein Informatics Group. https://www.blopig.com/blog/2021/07/alphafold-2-is-here-whats-behind-the-structure-prediction-miracle/

[8] Google Cloud. (n.d.). Tensor Processing Units (TPUs). https://cloud.google.com/tpu?hl=en

[9] Intel. (n.d.). What is a GPU? Graphics processing units defined. https://www.intel.com/content/www/us/en/products/docs/processors/what-is-a-gpu.html

[10] Pure Storage. (2022, November 26). Parallel vs. distributed computing: An overview. Pure Storage Blog. https://blog.purestorage.com/purely-informational/parallel-vs-distributed-computing-an-overview/

[11] AlphaFold Protein Structure Database. (n.d.). EMBL-EBI. https://alphafold.ebi.ac.uk/

[12] Google Cloud. (n.d.). Nuclera runs AlphaFold2 on Vertex AI. Google Cloud Blog. https://cloud.google.com/blog/topics/healthcare-life-sciences/nuclera-runs-alphafold2-on-vertex-ai