Engineering Strategies for AI-Driven Innovation Series — Part 1/3

Laying the Foundations with Scalability and Modularity

Introduction

Continuous technological innovation keeps expanding what is achievable, and artificial intelligence stands at the forefront of this transformation. In this series, I will demystify the relationship between technological prowess and AI advancement, and explore the core principles of scalable system design.

This series is designed for curious readers eager to understand the technological processes underpinning AI-powered innovation. Whether you’re an experienced practitioner looking to hone your engineering strategy or an enthusiastic beginner, these insights will serve as your guide to crafting smart designs in a constantly evolving landscape.

In Part 1, I will delve into the following subjects:

Grasping the Concept of Scalability in AI

Principles of Modular Design with a Case Study on Netflix’s Backend System

Understanding Scalability in AI

In my previous article, Scaling AI in Business: Driving Innovation and Efficiency, I explored key strategies and considerations for effectively scaling AI within an organization.

From an engineering perspective, scalability is the ability of an AI system to withstand fluctuating workload demand and to handle user growth. Here are a few reasons why scalability is such an important part of designing AI systems.

Computational Resources: As AI is applied to more complex tasks (for example, in the healthcare or finance domains), model complexity increases, which in turn increases the demand for computational power. The AI system should be able to keep up with this demand while maintaining or improving its performance.

Data Volume: In the real world, large datasets are required to train machine learning models, and AI systems often rely on high data volumes to generate insights. A scalable AI system can manage and process growing data volumes efficiently without a drop in performance.

Adaptability: Requirements often evolve throughout the project lifecycle, frequently because of unforeseen circumstances. A scalable AI system can adapt to such changes, to some extent, without significant re-engineering effort (for example, when incorporating new data sources).

User Growth: As the user base increases, it becomes crucial for the AI systems to handle the growing number of concurrent users without degrading response time. This has a direct impact on customer satisfaction, which in turn affects revenue.
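To make the user-growth point concrete, here is a rough back-of-the-envelope sketch of how the number of service replicas might grow with the user base. The function name, the 0.2 requests per second per user, the 50 requests per second per replica, and the 30% headroom are all illustrative assumptions, not measurements from any real system.

```python
import math

def replicas_needed(peak_rps: float, rps_per_replica: float, headroom: float = 0.3) -> int:
    """Estimate how many identical replicas are needed to serve peak load.

    headroom is the fraction of each replica's capacity kept in reserve.
    """
    if rps_per_replica <= 0 or not 0 <= headroom < 1:
        raise ValueError("rps_per_replica must be > 0 and headroom in [0, 1)")
    usable_rps = rps_per_replica * (1 - headroom)
    # Always keep at least one replica running, even with no traffic.
    return max(1, math.ceil(peak_rps / usable_rps))

for users in (1_000, 10_000, 100_000):
    # Assumption: each concurrent user issues about 0.2 requests per second.
    print(users, "users ->", replicas_needed(peak_rps=users * 0.2, rps_per_replica=50), "replicas")
```

In a scalable design, moving from one row of this estimate to the next should be a matter of adding replicas rather than redesigning the system.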

Principles of Modular Design

Modular design in AI systems emphasizes decomposing the system into distinct, self-contained units, each responsible for a slice of the overall business functionality. This enables concurrent development of units, targeted testing of each module, and easier maintenance. It also enables precision, allowing resources to be allocated more efficiently to the modules that need them the most.

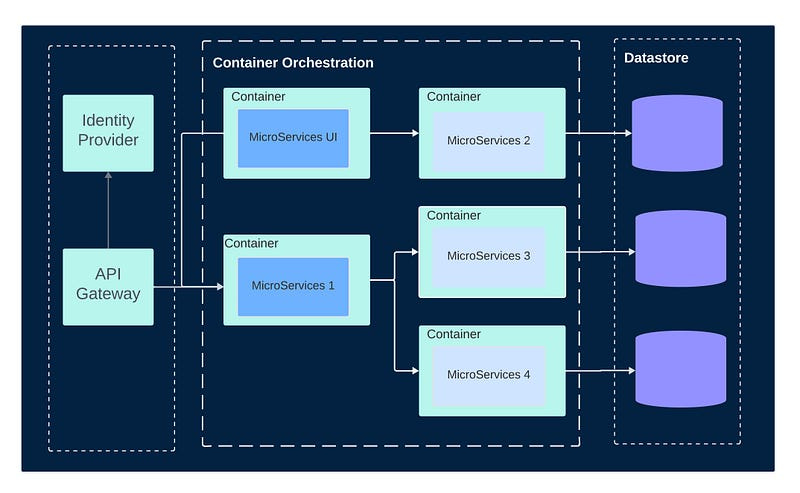

The microservices architecture is a prime example of this approach, where services operate independently, communicate via well-defined APIs, and can be individually scaled to meet demand. This highlights the advantages of using smaller, more manageable parts in complex AI systems.

As depicted in the diagram above, containerization is often used when transforming traditional monolithic applications into a microservices architecture, a design approach that builds a single application as a suite of small services, each running in its own process and communicating through lightweight mechanisms, often an HTTP-based API. Containerization itself is a lightweight form of virtualization that encapsulates an application in a container with its own operating environment.

For a deeper dive into containerization, I suggest checking out the article below.

Containerization Explained | IBM

Learn about containerization and its role and benefits in cloud native application development. (www.ibm.com)

Imagine you’re at a food court with lots of different food stalls, each serving a specific type of cuisine. You can choose what you want to eat from each stall, creating a meal that’s just right for you.

Now, think of microservices architecture as the digital version of this food court. Instead of one big restaurant that serves everything (which would be like a traditional monolithic software system), you have a collection of smaller, specialized services (the food stalls). Each microservice excels at a specific task, just as one stall might be great at making tacos while another is known for its sushi.

When you use an app built with microservices, it’s just like visiting a food court. Your request for a particular action, like checking your order status or making a payment, goes to the specific microservice that handles that task. By adopting this approach, the app gains greater flexibility and can be updated easily, because you only need to make changes to the specific microservice related to that task, without disturbing the others.

In a nutshell, microservices architecture breaks down a big, complex app into smaller, manageable pieces that work together but are developed and maintained separately. This makes things easier to handle, more efficient, and quicker to improve or fix.
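The food court analogy can be sketched in a few lines of Python. This is a minimal illustration, assuming each service is just a function in one process; in a real deployment each would run separately behind its own API. All names here (`order_status_service`, `payment_service`, `ROUTES`, `handle_request`) are hypothetical.

```python
from typing import Callable, Dict

# Each "stall" handles exactly one kind of request.
def order_status_service(payload: dict) -> dict:
    return {"order_id": payload["order_id"], "status": "shipped"}

def payment_service(payload: dict) -> dict:
    return {"order_id": payload["order_id"], "charged": payload["amount"]}

# The routing table is the only place that knows which service owns which action.
ROUTES: Dict[str, Callable[[dict], dict]] = {
    "order_status": order_status_service,
    "payment": payment_service,
}

def handle_request(action: str, payload: dict) -> dict:
    """Send the request to the one service responsible for this action."""
    service = ROUTES.get(action)
    if service is None:
        return {"error": f"unknown action: {action}"}
    return service(payload)

print(handle_request("order_status", {"order_id": 42}))
print(handle_request("payment", {"order_id": 42, "amount": 9.99}))
```

Changing how payments work only touches `payment_service`; the order status path is left alone, which is the flexibility the analogy describes.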

According to best practices, the different services should be loosely coupled, organized around business capabilities, independently deployable, and owned by a single team. Applied correctly, microservices offer multiple advantages. [3]

Case Study: Netflix’s Backend System

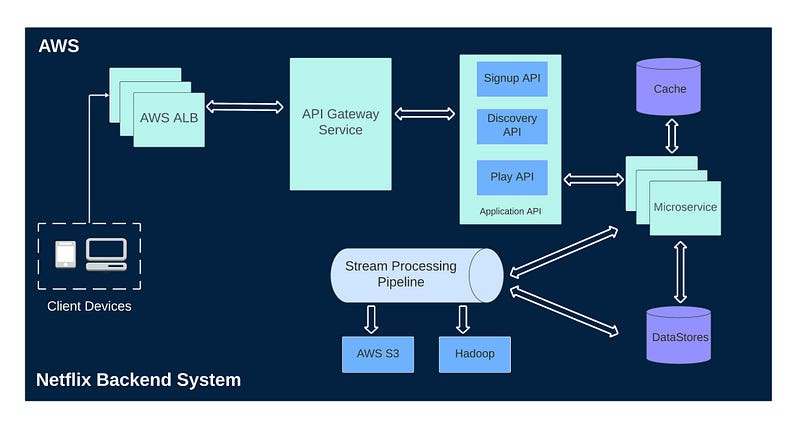

The Netflix backend is organized as a collection of specialized services, an approach commonly referred to as a microservice architecture.

As shown in the diagram above, this architecture serves all the APIs required by the mobile and web applications. When a request is received, the system calls various microservices to collect the required data. These microservices can also retrieve additional data from other microservices in the network. Once all relevant information has been gathered, a detailed response to the API request is generated and sent back to the original endpoint.
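The request flow described above (call several services, let some of them call others, then assemble one response) is often called fan-out and aggregation. Below is a minimal sketch using Python's `asyncio`. The service functions and their return values are hypothetical stand-ins for network calls and do not represent Netflix's actual APIs.

```python
import asyncio

async def fetch_profile(user_id: int) -> dict:
    await asyncio.sleep(0.01)  # stands in for a network call
    return {"user_id": user_id, "name": "demo-user"}

async def fetch_watch_history(user_id: int) -> list:
    await asyncio.sleep(0.01)
    return ["title-a", "title-b"]

async def fetch_recommendations(user_id: int) -> list:
    # This service itself retrieves data from another service.
    history = await fetch_watch_history(user_id)
    return [f"similar-to-{title}" for title in history]

async def home_page(user_id: int) -> dict:
    """Call the services concurrently, then assemble a single response."""
    profile, history, recommendations = await asyncio.gather(
        fetch_profile(user_id),
        fetch_watch_history(user_id),
        fetch_recommendations(user_id),
    )
    return {"profile": profile, "history": history, "recommendations": recommendations}

print(asyncio.run(home_page(7)))
```

Because the calls run concurrently, the response time is driven by the slowest dependency chain rather than by the sum of all calls.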

When a client requests to play a video, the request is sent to Netflix’s backend on AWS. Amazon’s Elastic Load Balancer (ELB) directs the traffic to the appropriate services. The request then reaches Netflix’s API Gateway, Zuul, which manages dynamic routing, monitors traffic, and ensures security and failure resilience.

The Application API, the brain of Netflix’s operations, processes various user activities. A play request is handled by the Play API, which then triggers one or more microservices to execute the task. These microservices, small and usually stateless, communicate with each other and may interact with data stores as needed.

The microservices also stream user activity and other data to the Stream Processing Pipeline, where it is analyzed in real time or batch processed for insights. The processed data can then be stored in AWS S3, Hadoop HDFS, Cassandra, or other data systems.
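As a toy illustration of the idea, services can publish activity events to a stream, and a separate consumer can aggregate them later. The sketch below uses an in-memory `queue.Queue` as a stand-in for a real streaming system; the event fields and function names are assumptions made for this example.

```python
from collections import Counter
from queue import Queue

def emit(stream: Queue, user_id: int, title: str, action: str) -> None:
    """Called by a service to publish one activity event (fire and forget)."""
    stream.put({"user_id": user_id, "title": title, "action": action})

def count_plays(stream: Queue) -> Counter:
    """Drain the events currently queued and count plays per title."""
    plays = Counter()
    while not stream.empty():
        event = stream.get()
        if event["action"] == "play":
            plays[event["title"]] += 1
    return plays

stream = Queue()
emit(stream, 1, "title-a", "play")
emit(stream, 2, "title-a", "play")
emit(stream, 2, "title-b", "search")
print(count_plays(stream))
```

The producing services never wait for the analysis to finish, which keeps the request path fast while the insights are computed separately.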

While the focus of this article is on the scalability and modular design principles, I’d like to take a brief detour to discuss how Netflix harnesses the power of deep learning and machine learning to enhance user experiences through its sophisticated recommendation system.

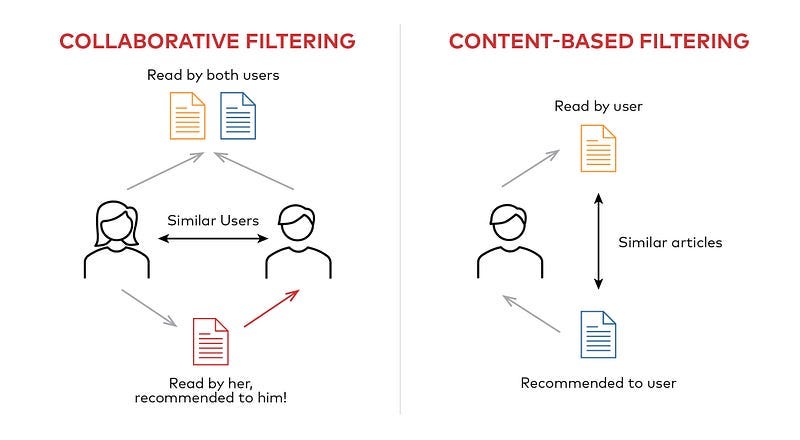

Netflix uses advanced machine learning techniques, including deep learning, to power its recommendation systems. The sophisticated recommendation architecture is a blend of advanced algorithms and human expertise designed to curate a highly personalized viewing experience. The system employs both collaborative filtering and content-based filtering. The goal of these systems is to provide personalized content suggestions to each of its millions of users, enhancing their viewing experience by helping them discover movies and TV shows that align with their preferences.

Collaborative filtering: This analyzes the viewing patterns of users with similar tastes and suggests content that a user might enjoy based on what others like them have watched.

Content-based filtering: This examines the details of the shows and movies themselves, such as genres, actors, and directors, to recommend titles with similar characteristics.
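The two approaches can be contrasted with a small sketch. This is a simplified illustration on made-up data, using Jaccard set overlap as the similarity measure. It is not Netflix's algorithm, and the users, titles, and attribute tags are hypothetical.

```python
# Hypothetical toy data: which titles each user has watched.
WATCHED = {
    "ana": {"Drama A", "Thriller B", "Comedy C"},
    "ben": {"Drama A", "Thriller B", "Sci-Fi D"},
    "cy": {"Comedy C", "Cartoon E"},
}
# Hypothetical attributes per title (genre, actor, and director tags).
ATTRIBUTES = {
    "Drama A": {"drama", "actor:x", "director:p"},
    "Thriller B": {"thriller", "actor:x", "director:q"},
    "Comedy C": {"comedy", "actor:y"},
    "Sci-Fi D": {"sci-fi", "thriller", "director:q"},
    "Cartoon E": {"comedy", "animation"},
}

def jaccard(a: set, b: set) -> float:
    """Overlap between two sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def collaborative(user: str) -> list:
    """Rank unseen titles by how similar their viewers are to this user."""
    scores = {}
    for other, titles in WATCHED.items():
        if other == user:
            continue
        similarity = jaccard(WATCHED[user], titles)
        for title in titles - WATCHED[user]:
            scores[title] = scores.get(title, 0.0) + similarity
    return sorted(scores, key=lambda t: (-scores[t], t))

def content_based(user: str) -> list:
    """Rank unseen titles by attribute overlap with titles the user watched."""
    seen = WATCHED[user]
    scores = {
        title: max(jaccard(attrs, ATTRIBUTES[s]) for s in seen)
        for title, attrs in ATTRIBUTES.items()
        if title not in seen
    }
    return sorted(scores, key=lambda t: (-scores[t], t))

print(collaborative("ana"))
print(content_based("ana"))
```

Note that `collaborative` never looks at `ATTRIBUTES` and `content_based` never looks at other users, which is the essential difference between the two techniques.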

At the heart of this system is deep learning, which meticulously parses through extensive user data, including watch history, search queries, and even the specific times users prefer certain genres, to continually refine and enhance recommendation accuracy. This AI-driven approach evolves with each interaction, ensuring that the system becomes more attuned to individual preferences over time.

You can read more about Netflix’s Deep Learning for Recommender Systems at Article.

Turning our focus back to the concept of modular design, Netflix’s microservices-based backend architecture plays a pivotal role in its capacity to handle and scale with the demands of a global user base. By segmenting the system into smaller, self-sufficient services, each dedicated to a particular task, Netflix achieves a robust, agile platform that can swiftly adjust to new developments.

For a deeper dive into Netflix’s Backend Architecture, I highly suggest checking out this Article.

Microservices allow for easier scaling since individual components can be adjusted without affecting the entire system. This modularity also means that if one service goes down, the rest of the system can keep running as long as the failure is isolated, which is crucial for maintaining a reliable streaming service.
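One common way to keep a single failing service from taking down the whole response is to wrap the call with a fallback. The sketch below is a minimal illustration of that idea under simplified assumptions. The function names and the fallback data are hypothetical, and this is not a description of Netflix's actual resilience tooling.

```python
def call_with_fallback(primary, fallback):
    """Try the primary service; if it fails, serve a degraded default instead."""
    try:
        return primary()
    except Exception:
        return fallback()

def recommendations_service():
    # Simulates an outage of one microservice.
    raise ConnectionError("recommendation service is down")

def popular_titles():
    # Generic, non-personalized default used while the service is unavailable.
    return ["popular-1", "popular-2"]

def home_page() -> dict:
    return {
        "catalog": ["title-a", "title-b"],
        "recommended": call_with_fallback(recommendations_service, popular_titles),
    }

print(home_page())
```

The page still renders with a less personalized row, so users see a degraded experience rather than an error.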

The use of microservices also facilitates rapid deployment of new features and updates, helping Netflix to innovate and improve the user experience continuously. The architecture’s agility also supports Netflix’s global reach, enabling efficient content delivery and personalized user experiences across diverse regions and devices.

This concludes Part 1 of the three-part series. Stay tuned for Part 2, where I will delve into Cloud Computing and Distributed Systems.

References

[1] Microservices-based architecture: Scaling enterprise ML models. Sigmoid. (2023, December 19). https://www.sigmoid.com/blogs/microservices-based-architecture-key-to-scaling-enterprise-ml-models/

[2] IBM developer. (n.d.). https://developer.ibm.com/articles/why-should-we-use-microservices-and-containers/

[3] Engdahl, S. (2008). Blogs. Amazon. https://aws.amazon.com/blogs/architecture/lets-architect-architecting-microservices-with-containers/

[4] Cubet. (n.d.). Importance of Microservice Architecture. Cubet Techno Labs. https://cubettech.com/resources/blog/importance-of-microservice-architecture/

[5] Mangla, P. (2023, July 1). Netflix movies and Series recommendation systems. PyImageSearch. https://pyimagesearch.com/2023/07/03/netflix-movies-and-series-recommendation-systems/

[6] Blog, N. T. (2017, April 18). System architectures for personalization and recommendation. Medium. https://netflixtechblog.com/system-architectures-for-personalization-and-recommendation-e081aa94b5d8